K8s Calico network plugin issues (continuously updated)

calico-node pod never comes up

Number of node(s) with BGP peering established = 0

A fix commonly suggested online:

https://blog.csdn.net/qq_36783142/article/details/107912407

```yaml
# env entry of the calico-node container in the calico-node DaemonSet
- name: IP_AUTODETECTION_METHOD
  value: "interface=enp26s0f3"
```
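If the DaemonSet is already deployed, the same variable can also be set in place with `kubectl set env`, which updates the pod template so the calico-node pods are recreated. A minimal sketch, assuming the DaemonSet is named `calico-node` in `kube-system` (as in the standard Calico manifest); `set_autodetect_iface` is a hypothetical helper, and setting `KUBECTL=echo` only prints the command:

```shell
# Hypothetical helper: point Calico's IP autodetection at one interface.
# Assumes the DaemonSet is kube-system/calico-node; KUBECTL=echo prints the command instead.
# $1: interface name or regex, e.g. enp26s0f3
set_autodetect_iface() {
  ${KUBECTL:-kubectl} set env daemonset/calico-node -n kube-system \
    "IP_AUTODETECTION_METHOD=interface=$1"
}
```

Usage: `set_autodetect_iface enp26s0f3`, then `kubectl -n kube-system rollout status ds/calico-node` to watch the pods restart.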

However, this did not fix the problem in my environment.

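The cause appeared to be a routing problem: stale rules left over from the node's previous environment. Cleaning them up with the following commands fixed it: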

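```shell
# Stop kubelet and Docker so nothing rewrites iptables during the cleanup
systemctl stop kubelet
systemctl stop docker
# Flush all rules in the filter table, then in the nat table
iptables --flush
iptables -t nat --flush
# Start Docker and kubelet again; Docker, kube-proxy and calico-node
# re-create the rules they need once they are running
systemctl start docker
systemctl start kubelet
```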
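Flushing whole tables is a blunt tool. Before doing it, it may be worth saving the current rules (`iptables-save > file`, restorable with `iptables-restore < file`) and checking whether Calico chains are present at all. Calico's Felix names its iptables chains with a `cali-` prefix; the sketch below (`count_cali_chains` is a hypothetical helper) counts such chain declarations in `iptables-save` output read from stdin:

```shell
# Hypothetical helper: count Calico-owned chains (prefix "cali-") in iptables-save output.
# Chain declarations in iptables-save output look like ":cali-INPUT - [0:0]".
count_cali_chains() {
  # grep -c prints 0 but exits 1 when nothing matches; "|| true" keeps the status 0
  grep -c '^:cali-' || true
}
```

Usage: `iptables-save | count_cali_chains`.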

calico-node pod unhealthy

Readiness probe failed: container is not running

Symptoms:

```text
[root@node2 ~]# kubectl get po -A -owide
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
kube-system   calico-kube-controllers-67f55f8858-5cgpg   1/1     Running   2          14d   10.151.11.53   gpu53   <none>           <none>
kube-system   calico-node-l6crs                          0/1     Running   3          18d   10.151.11.61   node2   <none>           <none>
kube-system   calico-node-vb7s5                          0/1     Running   1          57m   10.151.11.53   gpu53   <none>           <none>
```
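Both calico-node pods are `Running` but only `0/1` ready. To pick such pods out of a long listing, a small filter can compare the two numbers in the READY column. A sketch, where `not_ready_pods` is a hypothetical helper that assumes the `-A` layout (NAMESPACE in column 1, NAME in column 2, READY in column 3):

```shell
# Hypothetical helper: print namespace/name of pods whose ready count is below the container count.
# Expects `kubectl get po -A` (or `-A -o wide`) output on stdin; line 1 is the header.
not_ready_pods() {
  awk 'NR > 1 { split($3, r, "/"); if (r[1] != r[2]) print $1 "/" $2 }'
}
```

Usage: `kubectl get po -A -o wide | not_ready_pods`.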

The calico-node failure looks similar to the one above, but here the probe checks fail:

```text
Events:
  Type     Reason          Age                    From     Message
  ----     ------          ----                   ----     -------
  Warning  Unhealthy       69m (x2936 over 12d)   kubelet  Readiness probe errored: rpc error: code = Unknown desc = operation timeout: context deadline exceeded
  Warning  Unhealthy       57m (x2938 over 12d)   kubelet  Liveness probe errored: rpc error: code = Unknown desc = operation timeout: context deadline exceeded
  Warning  Unhealthy       12m (x6 over 13m)      kubelet  Liveness probe failed: container is not running
  Normal   SandboxChanged  11m (x2 over 13m)      kubelet  Pod sandbox changed, it will be killed and re-created.
  Normal   Killing         11m (x2 over 13m)      kubelet  Stopping container calico-node
  Warning  Unhealthy       8m3s (x32 over 13m)    kubelet  Readiness probe failed: container is not running
  Warning  Unhealthy       4m45s (x6 over 5m35s)  kubelet  Liveness probe failed: container is not running
  Normal   SandboxChanged  3m42s (x2 over 5m42s)  kubelet  Pod sandbox changed, it will be killed and re-created.
  Normal   Killing         3m42s (x2 over 5m42s)  kubelet  Stopping container calico-node
  Warning  Unhealthy       42s (x31 over 5m42s)   kubelet  Readiness probe failed: container is not running
```
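The `SandboxChanged` and `Killing` events show the container being torn down and recreated, so the current container's log may be nearly empty; the log of the previous instance is usually more telling. A sketch using `kubectl logs --previous` on the `calico-node` container named in the events (`calico_prev_logs` is a hypothetical helper; `KUBECTL=echo` prints the command instead of running it):

```shell
# Hypothetical helper: show the log of the previous (killed) calico-node container of a pod.
# $1: pod name, e.g. calico-node-l6crs
calico_prev_logs() {
  ${KUBECTL:-kubectl} logs -n kube-system "$1" -c calico-node --previous
}
```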

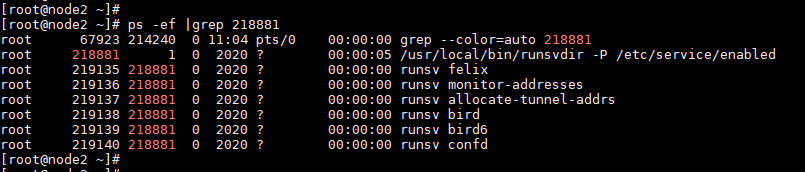

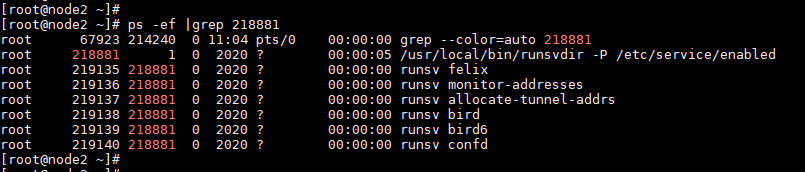

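The logs of the failing pod showed that a port it needed was already in use. Checking the port owner with netstat showed that the processes holding it were still lingering.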
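To map the port reported in the log to a process, the `PID/Program name` column of `netstat -tlnp` can be filtered by port. A sketch, where `port_owner` is a hypothetical helper; use whatever port the calico-node log complains about (Calico commonly listens on TCP 179 for BGP and on 9099 for the Felix health endpoint, but check your own log):

```shell
# Hypothetical helper: print PID/Program of TCP listeners on port $1.
# Expects `netstat -tlnp` output on stdin (column 4 = local address, column 7 = PID/Program).
port_owner() {
  # Match ":<port>" at the end of the local address so that 179 does not match 1179
  awk -v p=":$1" 'length($4) >= length(p) && substr($4, length($4) - length(p) + 1) == p { print $7 }'
}
```

Usage (as root, so netstat can show PIDs): `netstat -tlnp | port_owner 179`.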

Deleting the calico-node component, and even running kill, did not remove those processes:

```shell
# Delete the calico-node component
cd /etc/kubernetes/
kubectl delete -f calico-node.yml
```
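`kubectl delete` returning only means the API objects are being removed; the pods may still be terminating, and, as seen here, host processes can outlive them. A sketch that waits for the pods to disappear, assuming they carry the `k8s-app=calico-node` label used by the standard Calico manifests (`wait_calico_gone` is a hypothetical helper; `KUBECTL=echo` prints the command):

```shell
# Hypothetical helper: block until all calico-node pods are deleted, or 120s pass.
# Assumes the pod label k8s-app=calico-node.
wait_calico_gone() {
  ${KUBECTL:-kubectl} wait --for=delete pod -l k8s-app=calico-node \
    -n kube-system --timeout=120s
}
```

If no pods match any more, kubectl may report that no matching resources were found, which here just means they are already gone. The node itself still has to be checked for leftover processes afterwards.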

These processes had been started by Docker but were never reaped. Process 21881 was in state D (uninterruptible sleep).
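A process in state D is blocked inside the kernel (usually on I/O) and generally does not respond to signals until that wait ends, which is consistent with kill having no effect on it. A sketch for listing such processes (`d_state_pids` is a hypothetical helper reading `ps -eo pid,stat,args` output):

```shell
# Hypothetical helper: print PIDs of processes in uninterruptible sleep (STAT starting with D).
# Expects `ps -eo pid,stat,args` output on stdin; the first line is the header.
d_state_pids() {
  awk 'NR > 1 && $2 ~ /^D/ { print $1 }'
}
```

Usage: `ps -eo pid,stat,args | d_state_pids`.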

Rebooting the node released the ports held by the Calico processes, which resolved the problem.

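Sometimes the leftover processes can be killed. In the case below, the leftover process `/usr/local/bin/runsvdir -P /etc/service/enabled` was in state S (`Ss` in `ps aux`) and its children were the Calico services. Killing it with `kill` cleaned them up, after which the calico-node component could be started again.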

```text
[root@node2 kubernetes]# ps -ef |grep 175885
root 24910 36399 0 16:04 pts/6 00:00:00 grep --color=auto 175885
root 175885 175862 0 15:12 ? 00:00:00 /usr/local/bin/runsvdir -P /etc/service/enabled
root 201783 175885 0 15:29 ? 00:00:00 runsv felix
root 201784 175885 0 15:29 ? 00:00:00 runsv monitor-addresses
root 201785 175885 0 15:29 ? 00:00:00 runsv allocate-tunnel-addrs
root 201786 175885 0 15:29 ? 00:00:00 runsv bird
root 201787 175885 0 15:29 ? 00:00:00 runsv bird6
root 201788 175885 0 15:29 ? 00:00:00 runsv confd
[root@node2 kubernetes]# ps aux |grep 175885
root 25633 0.0 0.0 112712 960 pts/6 S+ 16:05 0:00 grep --color=auto 175885
root 175885 0.0 0.0 4356 372 ? Ss 15:12 0:00 /usr/local/bin/runsvdir -P /etc/service/enabled
[root@node2 kubernetes]# kill 175885
[root@node2 kubernetes]# ps -ef |grep calico
root 33242 36399 0 16:11 pts/6 00:00:00 grep --color=auto calico
```
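`grep 175885` matches the PID anywhere on the line, including the grep process itself. A slightly stricter sketch matches only the PPID column, so it lists exactly the `runsv` supervisors under `runsvdir` before it is killed (`children_of` is a hypothetical helper reading `ps -ef` output):

```shell
# Hypothetical helper: print PIDs whose parent PID (column 3 of `ps -ef`) equals $1.
# The ps header line never matches because its column 3 is the text "PPID".
children_of() {
  awk -v ppid="$1" '$3 == ppid { print $2 }'
}
```

Usage: `ps -ef | children_of 175885`; once the list looks right, `kill 175885` as above.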

So when deleting calico-node, use `ps -ef | grep calico` to check whether any Calico processes are still running on the node, for example:

```text
[root@node2 net.d]# ps -ef |grep calico
root 57982 18990 0 10:54 pts/8 00:00:00 grep --color=auto calico
root 219142 219137 0 2020 ? 00:01:11 calico-node -allocate-tunnel-addrs
root 219143 219135 0 2020 ? 02:25:07 calico-node -felix
root 219144 219136 0 2020 ? 00:01:51 calico-node -monitor-addresses
root 219145 219140 0 2020 ? 00:01:13 calico-node -confd
root 219407 219138 0 2020 ? 00:11:20 bird -R -s /var/run/calico/bird.ctl -d -c /etc/calico/confd/config/bird.cfg
root 219408 219139 0 2020 ? 00:10:59 bird6 -R -s /var/run/calico/bird6.ctl -d -c /etc/calico/confd/config/bird6.cfg
```
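`grep calico` also reports its own grep process, while the `runsvdir`/`runsv` supervisors seen earlier do not contain the string `calico` at all. A sketch that skips grep and also reports the supervisors (`calico_leftover_pids` is a hypothetical helper; `runsv`/`runsvdir` come from runit and could belong to other software on the host, so review the list before killing anything):

```shell
# Hypothetical helper: print PIDs of leftover Calico-related processes from `ps -ef` output.
# Matches "calico" (calico-node, and bird/bird6 through their /var/run/calico paths) and
# runit's runsv/runsvdir supervisors; skips lines whose command (column 8) is grep.
calico_leftover_pids() {
  awk '$8 != "grep" && /calico|runsv/ { print $2 }'
}
```

Usage: `ps -ef | calico_leftover_pids`; empty output means nothing is left.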

Appendix

Check the Calico network status of the current node

Example output for a correctly working Calico network:

```text
[root@node2 kubernetes]# calicoctl node status
Calico process is running.

IPv4 BGP status
+--------------+-------------------+-------+----------+-------------+
| PEER ADDRESS |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+--------------+-------------------+-------+----------+-------------+
| 192.168.1.11 | node-to-node mesh | up    | 08:13:23 | Established |
+--------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
```
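The error at the top of this post, `Number of node(s) with BGP peering established = 0`, roughly corresponds to this table having no `Established` row. A sketch that counts such rows, assuming the table layout shown above (`established_peers` is a hypothetical helper):

```shell
# Hypothetical helper: count BGP peers whose INFO column reads "Established".
# Expects `calicoctl node status` output on stdin; table rows are split on "|",
# so INFO is field 6 (field 1 is the empty text before the leading "|").
established_peers() {
  awk -F'|' 'NF >= 6 { info = $6; gsub(/ /, "", info); if (info == "Established") n++ }
             END { print n + 0 }'
}
```

Usage: `calicoctl node status | established_peers`; `0` matches the failing case.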

Command to list the Kubernetes nodes known to Calico:

```shell
# Run on the master node
DATASTORE_TYPE=kubernetes KUBECONFIG=~/.kube/config calicoctl get nodes
```

Example:

```text
[root@node2 kubernetes]# DATASTORE_TYPE=kubernetes KUBECONFIG=~/.kube/config calicoctl get nodes
NAME
gpu53
node2
```
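With the Kubernetes datastore, every node running calico-node would normally appear in this list. A sketch that compares it with the node list from kubectl and prints Kubernetes nodes missing on the Calico side (`missing_calico_nodes` is a hypothetical helper taking two files with one node name per line; a `NAME` header is skipped):

```shell
# Hypothetical helper: print Kubernetes nodes that have no matching Calico node.
# $1: file with Calico node names (e.g. saved `calicoctl get nodes` output)
# $2: file with Kubernetes node names, one per line
missing_calico_nodes() {
  # FILENAME == ARGV[1] (rather than NR == FNR) stays correct when the first file is empty
  awk 'FILENAME == ARGV[1] { if (NF && $1 != "NAME") seen[$1] = 1; next }
       NF && $1 != "NAME" && !($1 in seen) { print $1 }' "$1" "$2"
}
```

Usage: save `calicoctl get nodes` (with the variables above) to `calico-nodes.txt`, save `kubectl get nodes --no-headers -o custom-columns=NAME:.metadata.name` to `k8s-nodes.txt`, then run `missing_calico_nodes calico-nodes.txt k8s-nodes.txt`.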

Command to list the IP pools:

```shell
# Run on the master node
DATASTORE_TYPE=kubernetes KUBECONFIG=~/.kube/config calicoctl get ipPool -o yaml
```

Example:

```text
[root@node2 kubernetes]# DATASTORE_TYPE=kubernetes KUBECONFIG=~/.kube/config calicoctl get ipPool -o yaml
apiVersion: projectcalico.org/v3
items: []
kind: IPPoolList
metadata: {}
```
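Here `items: []` means no IP pool exists. calico-node normally creates a default pool on its first start (from `CALICO_IPV4POOL_CIDR`), so an empty list is consistent with calico-node never having come up. If a pool has to be created by hand, the sketch below prints a minimal `IPPool` resource. The function name, default pool name, `ipipMode: Always` (the classic calico.yaml default) and the CIDR you pass are all assumptions; the CIDR must match your cluster's pod network CIDR:

```shell
# Hypothetical helper: print a minimal Calico IPPool manifest.
# $1: pool CIDR, e.g. 192.168.0.0/16 (must match the cluster's pod network CIDR)
# $2: optional pool name; defaults to default-ipv4-ippool, mirroring Calico's own default pool
ippool_manifest() {
  cat <<EOF
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: ${2:-default-ipv4-ippool}
spec:
  cidr: $1
  ipipMode: Always
  natOutgoing: true
EOF
}
```

Usage: `ippool_manifest 192.168.0.0/16 | DATASTORE_TYPE=kubernetes KUBECONFIG=~/.kube/config calicoctl apply -f -`. Adjust `ipipMode` if the cluster uses VXLAN or no encapsulation.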