Dynamic PV Provisioning (Kubernetes Dynamic Provisioning and Storage) and nfs-server-provisioner: Principles and Functional Verification

Design Overview

Storage is a critical part of running containers, and Kubernetes offers some powerful primitives for managing it. Dynamic volume provisioning, a feature unique to Kubernetes, allows storage volumes to be created on-demand. Without dynamic provisioning, cluster administrators have to manually make calls to their cloud or storage provider to create new storage volumes, and then create PersistentVolume objects to represent them in Kubernetes. The dynamic provisioning feature eliminates the need for cluster administrators to pre-provision storage. Instead, it automatically provisions storage when it is requested by users. This feature was introduced as alpha in Kubernetes 1.2, and has been improved and promoted to beta in the latest release, 1.4. This release makes dynamic provisioning far more flexible and useful.

What’s New?

The alpha version of dynamic provisioning only allowed a single, hard-coded provisioner to be used in a cluster at once. This meant that when Kubernetes determined storage needed to be dynamically provisioned, it always used the same volume plugin to do provisioning, even if multiple storage systems were available on the cluster. The provisioner to use was inferred based on the cloud environment - EBS for AWS, Persistent Disk for Google Cloud, Cinder for OpenStack, and vSphere Volumes on vSphere. Furthermore, the parameters used to provision new storage volumes were fixed: only the storage size was configurable. This meant that all dynamically provisioned volumes would be identical, except for their storage size, even if the storage system exposed other parameters (such as disk type) for configuration during provisioning.

Although the alpha version of the feature was limited in utility, it allowed us to “get some miles” on the idea, and helped determine the direction we wanted to take.

The beta version of dynamic provisioning, new in Kubernetes 1.4, introduces a new API object, StorageClass. Multiple StorageClass objects can be defined each specifying a volume plugin (aka provisioner) to use to provision a volume and the set of parameters to pass to that provisioner when provisioning. This design allows cluster administrators to define and expose multiple flavors of storage (from the same or different storage systems) within a cluster, each with a custom set of parameters. This design also ensures that end users don’t have to worry about the complexity and nuances of how storage is provisioned, but still have the ability to select from multiple storage options.

Dynamic Provisioning and Storage Classes in Kubernetes

Notes:

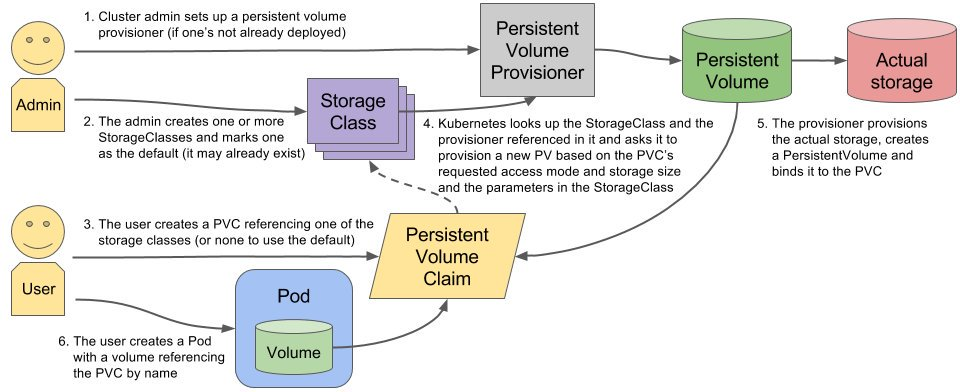

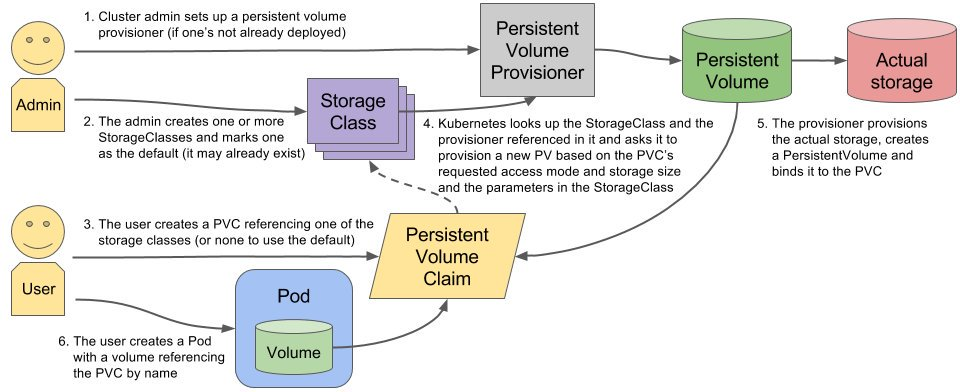

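- Administrators only need to pre-create a few StorageClass resources, one per storage type (think of each one as a template for creating PVs), instead of manually creating a PV for every PVC.

- Users create a PVC that references the desired StorageClass, and the system (here, the NFS provisioner) automatically creates a PV based on the PVC and binds the two.

- When a user's pod is deleted and the PVC is then deleted as needed, the bound PV is deleted automatically as well (with the Delete reclaim policy).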

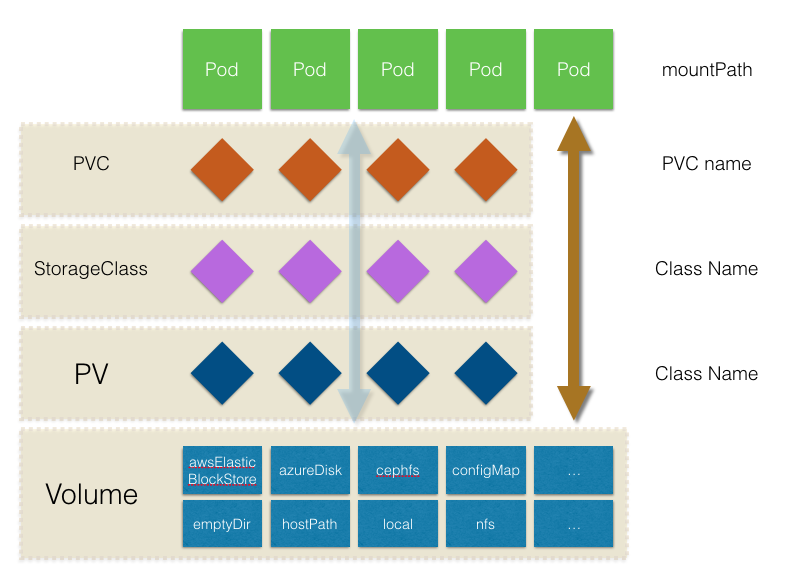

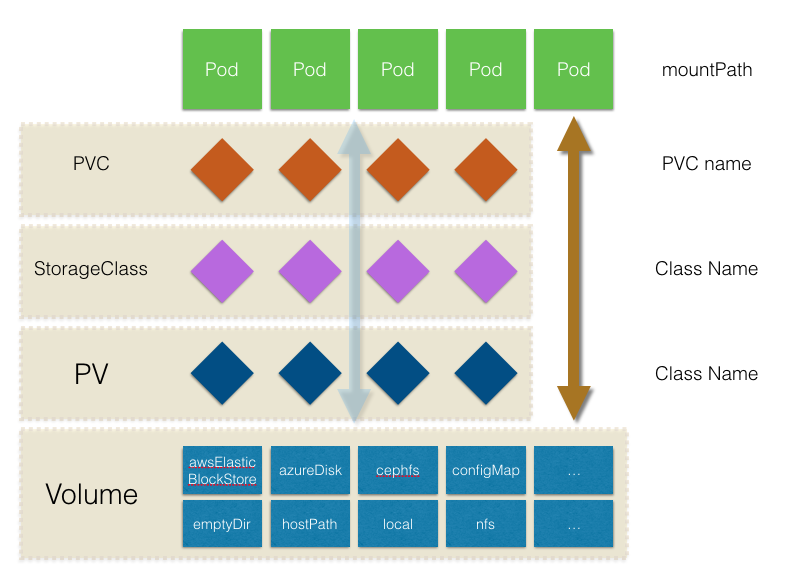

Provisioner, PV, and PVC diagrams

Resource view

Interaction view

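Setting up StorageClass + NFS roughly involves the following steps:

- Create a working NFS server.

- Create a ServiceAccount. It controls the permissions the NFS provisioner has when running in the Kubernetes cluster.

- Create a StorageClass. It handles PVC requests by calling the NFS provisioner to do the provisioning work, and links the resulting PV to the PVC.

- Create the NFS provisioner. It has two jobs: creating a mount point (volume) under the NFS shared directory, and creating a PV and associating it with that NFS mount point.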
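To make the division of labor in these steps concrete, here is a minimal Go sketch of the provisioner's core decision under simplifying assumptions. The types `StorageClass`, `Claim`, and `PV` and the function `provision` are hypothetical stand-ins, not the nfs-provisioner or client-go API. The sketch encodes two facts taken from this article. First, a provisioner only handles claims whose StorageClass `provisioner` field equals its own name (see the `provisioner` argument below). Second, the PV it creates is named `pvc-<claim UID>` and backed by a directory of the same name under the export directory, as seen in the deployment output.

```go
package main

import "fmt"

// StorageClass, Claim and PV are simplified, hypothetical stand-ins for the
// Kubernetes API objects; only the fields needed here are modeled.
type StorageClass struct {
	Name          string
	Provisioner   string // e.g. "example.com/nfs"
	ReclaimPolicy string // e.g. "Delete"
}

type Claim struct {
	Namespace, Name, UID string
	StorageClassName     string
	Storage              string // requested size, e.g. "1Mi"
}

type PV struct {
	Name, Path, ClaimRef, ReclaimPolicy, Capacity string
}

// provision returns a PV for the claim only if the claim's StorageClass names
// this provisioner; otherwise it returns false so that another provisioner
// (or an administrator) can handle the claim.
func provision(myName, exportDir string, classes map[string]StorageClass, c Claim) (PV, bool) {
	sc, ok := classes[c.StorageClassName]
	if !ok || sc.Provisioner != myName {
		return PV{}, false
	}
	name := "pvc-" + c.UID // naming observed in this article: "pvc-<claim UID>"
	return PV{
		Name:          name,
		Path:          exportDir + "/" + name, // one directory per volume
		ClaimRef:      c.Namespace + "/" + c.Name,
		ReclaimPolicy: sc.ReclaimPolicy,
		Capacity:      c.Storage,
	}, true
}

func main() {
	classes := map[string]StorageClass{
		"example-nfs": {Name: "example-nfs", Provisioner: "example.com/nfs", ReclaimPolicy: "Delete"},
	}
	claim := Claim{Namespace: "default", Name: "nfs", UID: "26703096-84df-4c18-88f5-16d0b09be156",
		StorageClassName: "example-nfs", Storage: "1Mi"}
	pv, ok := provision("example.com/nfs", "/export", classes, claim)
	fmt.Println(ok, pv.Name, pv.Path, pv.ClaimRef)
}
```

Keying the decision on the class's `provisioner` string is what lets several provisioners coexist in one cluster, each ignoring claims meant for the others.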

The nfs-provisioner project

New repository: https://github.com/kubernetes-sigs/nfs-ganesha-server-and-external-provisioner

Old repository (no longer used): https://github.com/kubernetes-retired/external-storage/tree/master/nfs

The image quay.io/kubernetes_incubator/nfs-provisioner can be used.

nfs-provisioner image

```shell
docker pull quay.io/kubernetes_incubator/nfs-provisioner
docker save quay.io/kubernetes_incubator/nfs-provisioner:latest -o nfs-provisioner.img.tar
```

Arguments

- provisioner - Name of the provisioner. The provisioner will only provision volumes for claims that request a StorageClass with a provisioner field set equal to this name.

- master - Master URL to build a client config from. Either this or kubeconfig needs to be set if the provisioner is being run out of cluster.

- kubeconfig - Absolute path to the kubeconfig file. Either this or master needs to be set if the provisioner is being run out of cluster.

- run-server - If the provisioner is responsible for running the NFS server, i.e. starting and stopping NFS Ganesha. Default true.

- use-ganesha - If the provisioner will create volumes using NFS Ganesha (D-Bus method calls) as opposed to using the kernel NFS server (‘exportfs’). If run-server is true, this must be true. Default true.

- grace-period - NFS Ganesha grace period to use in seconds, from 0-180. If the server is not expected to survive restarts, i.e. it is running as a pod & its export directory is not persisted, this can be set to 0. Can only be set if both run-server and use-ganesha are true. Default 90.

- enable-xfs-quota - If the provisioner will set xfs quotas for each volume it provisions. Requires that the directory it creates volumes in ('/export') is xfs mounted with option prjquota/pquota, and that it has the privilege to run xfs_quota. Default false.

- failed-retry-threshold - Limits the number of retries after a provisioning failure to a set number of attempts. Default 10.

- server-hostname - The hostname for the NFS server to export from. Only applicable when running out-of-cluster i.e. it can only be set if either master or kubeconfig are set. If unset, the first IP output by hostname -i is used.

- device-based-fsids - If file system handles created by NFS Ganesha should be based on major/minor device IDs of the backing storage volume ('/export'). When running a cloud based kubernetes service (like Google's GKE service) set this to false as it might affect client connections on restarts of the nfs provisioner pod. Default true.
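The constraints between these flags can be summarized as a small validation routine. The sketch below is hypothetical: the `provisionerArgs` type and `validate` function are illustrative and are not the project's flag-handling code. It checks only the rules stated in the list above:

- use-ganesha must be true when run-server is true;
- grace-period must be in 0-180, and may only be set when run-server and use-ganesha are both true;
- server-hostname may only be set together with master or kubeconfig.

```go
package main

import (
	"errors"
	"fmt"
)

// provisionerArgs models a subset of the documented flags (hypothetical type).
type provisionerArgs struct {
	Master         string
	Kubeconfig     string
	RunServer      bool // default true
	UseGanesha     bool // default true
	GracePeriod    int  // default 90
	GracePeriodSet bool // true if grace-period was passed explicitly
	ServerHostname string
}

// validate enforces the inter-flag rules described in the argument list.
func validate(a provisionerArgs) error {
	outOfCluster := a.Master != "" || a.Kubeconfig != ""
	if a.RunServer && !a.UseGanesha {
		return errors.New("use-ganesha must be true when run-server is true")
	}
	if a.GracePeriodSet {
		if !(a.RunServer && a.UseGanesha) {
			return errors.New("grace-period can only be set when run-server and use-ganesha are both true")
		}
		if a.GracePeriod < 0 || a.GracePeriod > 180 {
			return fmt.Errorf("grace-period %d out of range 0-180", a.GracePeriod)
		}
	}
	if a.ServerHostname != "" && !outOfCluster {
		return errors.New("server-hostname requires master or kubeconfig (out-of-cluster mode)")
	}
	return nil
}

func main() {
	defaults := provisionerArgs{RunServer: true, UseGanesha: true, GracePeriod: 90}
	fmt.Println(validate(defaults)) // <nil>
	bad := defaults
	bad.ServerHostname = "nfs.example.com"
	fmt.Println(validate(bad) != nil) // true
}
```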

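Storage quotas

nfs provisioner xfsQuotaer

The quota size is set by adding an XFS project that represents the target directory and setting a quota on that project.

Under the hood, this is implemented by xfsQuotaer.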

```go
// createQuota creates a quota for the directory by adding a project to
// represent the directory and setting a quota on it
func (p *nfsProvisioner) createQuota(directory string, capacity resource.Quantity) (string, uint16, error) {
	path := path.Join(p.exportDir, directory)

	// The limit is the requested capacity in bytes, e.g. 1Mi -> "1048576".
	limit := strconv.FormatInt(capacity.Value(), 10)

	block, projectID, err := p.quotaer.AddProject(path, limit)
	if err != nil {
		return "", 0, fmt.Errorf("error adding project for path %s: %v", path, err)
	}

	err = p.quotaer.SetQuota(projectID, path, limit)
	if err != nil {
		// Roll back the project registration if the quota cannot be set.
		p.quotaer.RemoveProject(block, projectID)
		return "", 0, fmt.Errorf("error setting quota for path %s: %v", path, err)
	}

	return block, projectID, nil
}
```
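`AddProject` and `SetQuota` ultimately drive the `xfs_quota` tool; the constructor checks that `xfs_quota` is on the PATH. The sketch below is an assumption about roughly what those calls amount to. It builds the expert-mode commands that register a directory as an XFS project and set a hard block limit on it, but does not execute them. Verify the exact commands against the project source before relying on them. `quotaCommands` is a hypothetical helper. As in `createQuota`, the limit is the capacity in bytes, so a 1Mi request becomes 1048576.

```go
package main

import (
	"fmt"
	"strconv"
)

// quotaCommands builds (but does not run) xfs_quota invocations for one volume
// directory. Assumption: this approximates what AddProject/SetQuota issue; the
// exact commands in nfs-provisioner may differ.
func quotaCommands(xfsPath, dir string, projectID uint16, limitBytes int64) [][]string {
	id := strconv.FormatUint(uint64(projectID), 10)
	limit := strconv.FormatInt(limitBytes, 10)
	return [][]string{
		// Set up the project tree so that files under dir are charged to project id.
		{"xfs_quota", "-x", "-c", fmt.Sprintf("project -s -p %s %s", dir, id), xfsPath},
		// Put a hard block limit on the project.
		{"xfs_quota", "-x", "-c", fmt.Sprintf("limit -p bhard=%s %s", limit, id), xfsPath},
	}
}

func main() {
	for _, cmd := range quotaCommands("/export", "/export/pvc-1234", 1, 1048576) {
		fmt.Println(cmd)
	}
}
```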

xfsQuotaer requires the XFS filesystem to be mounted with the prjquota (or pquota) option.

```go
type xfsQuotaer struct {
	xfsPath string

	// The file where we store mappings between project ids and directories, and
	// each project's quota limit information, for backup.
	// Similar to http://man7.org/linux/man-pages/man5/projects.5.html
	projectsFile string

	projectIDs map[uint16]bool

	mapMutex  *sync.Mutex
	fileMutex *sync.Mutex
}

// NewNFSProvisioner creates a Provisioner that provisions NFS PVs backed by
// the given directory.
func NewNFSProvisioner(exportDir string, client kubernetes.Interface, outOfCluster bool, useGanesha bool, ganeshaConfig string, enableXfsQuota bool, serverHostname string, maxExports int, exportSubnet string) controller.Provisioner {
	var quotaer quotaer
	var err error

	// Quotas are only applied when the XFS quota feature is enabled
	// (enable-xfs-quota); otherwise a dummy quotaer is used instead.
	if enableXfsQuota {
		quotaer, err = newXfsQuotaer(exportDir)
		if err != nil {
			glog.Fatalf("Error creating xfs quotaer! %v", err)
		}
	} else {
		quotaer = newDummyQuotaer()
	}

	// ... (rest of the constructor omitted in this excerpt)
}

// newXfsQuotaer constructs an xfsQuotaer for the given XFS export path.
func newXfsQuotaer(xfsPath string) (*xfsQuotaer, error) {
	if _, err := os.Stat(xfsPath); os.IsNotExist(err) {
		return nil, fmt.Errorf("xfs path %s does not exist", xfsPath)
	}

	isXfs, err := isXfs(xfsPath)
	if err != nil {
		return nil, fmt.Errorf("error checking if xfs path %s is an XFS filesystem: %v", xfsPath, err)
	}
	if !isXfs {
		return nil, fmt.Errorf("xfs path %s is not an XFS filesystem", xfsPath)
	}

	entry, err := getMountEntry(path.Clean(xfsPath), "xfs")
	if err != nil {
		return nil, err
	}
	// xfsQuotaer requires the XFS filesystem to be mounted with prjquota or pquota.
	if !strings.Contains(entry.VfsOpts, "pquota") && !strings.Contains(entry.VfsOpts, "prjquota") {
		return nil, fmt.Errorf("xfs path %s was not mounted with pquota nor prjquota", xfsPath)
	}

	_, err = exec.LookPath("xfs_quota")
	if err != nil {
		return nil, err
	}

	projectsFile := path.Join(xfsPath, "projects")
	projectIDs := map[uint16]bool{}
	_, err = os.Stat(projectsFile)
	if os.IsNotExist(err) {
		file, cerr := os.Create(projectsFile)
		if cerr != nil {
			return nil, fmt.Errorf("error creating xfs projects file %s: %v", projectsFile, cerr)
		}
		file.Close()
	} else {
		re := regexp.MustCompile("(?m:^([0-9]+):/.+$)")
		projectIDs, err = getExistingIDs(projectsFile, re)
		if err != nil {
			glog.Errorf("error while populating projectIDs map, there may be errors setting quotas later if projectIDs are reused: %v", err)
		}
	}

	xfsQuotaer := &xfsQuotaer{
		xfsPath:      xfsPath,
		projectsFile: projectsFile,
		projectIDs:   projectIDs,
		mapMutex:     &sync.Mutex{},
		fileMutex:    &sync.Mutex{},
	}

	return xfsQuotaer, nil
}
```
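The constructor reloads already-used project IDs from the projects file through `getExistingIDs`, whose body is not shown here. Below is a hypothetical equivalent, `parseProjectIDs`, that uses the same regular expression. It assumes each entry is a line of the form `<id>:<absolute path>`, as in projects(5). It skips lines that do not match, and it rejects IDs that do not fit in a uint16.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// parseProjectIDs is a hypothetical stand-in for getExistingIDs: it collects
// the project IDs already recorded in a projects file.
func parseProjectIDs(content string) (map[uint16]bool, error) {
	re := regexp.MustCompile("(?m:^([0-9]+):/.+$)") // same pattern as newXfsQuotaer
	ids := map[uint16]bool{}
	for _, m := range re.FindAllStringSubmatch(content, -1) {
		// m[1] is the captured numeric ID; bitSize 16 rejects values > 65535.
		id, err := strconv.ParseUint(m[1], 10, 16)
		if err != nil {
			return nil, fmt.Errorf("bad project id %q: %v", m[1], err)
		}
		ids[uint16(id)] = true
	}
	return ids, nil
}

func main() {
	ids, err := parseProjectIDs("1:/export/pvc-a\n2:/export/pvc-b\n#comment\n")
	fmt.Println(ids, err)
}
```

Tracking used IDs in memory this way lets a restarted provisioner avoid handing out an ID that already maps to another volume directory.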

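Quota expansion

With the default StorageClass and Kubernetes settings, changing the requested size in the PVC manifest claim.yaml and re-applying it fails with the following error: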

```
[root@node131 nfs]# vi deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
[root@node131 nfs]# # (edit the storage size)
[root@node131 nfs]#
[root@node131 nfs]# kubectl apply -f deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
Warning: resource persistentvolumeclaims/nfs is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.
Error from server (Forbidden): error when applying patch:
{"metadata":{"annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"v1\",\"kind\":\"PersistentVolumeClaim\",\"metadata\":{\"annotations\":{},\"name\":\"nfs\",\"namespace\":\"default\"},\"spec\":{\"accessModes\":[\"ReadWriteMany\"],\"resources\":{\"requests\":{\"storage\":\"5Mi\"}},\"storageClassName\":\"example-nfs\"}}\n"}},"spec":{"resources":{"requests":{"storage":"5Mi"}}}}
to:
Resource: "/v1, Resource=persistentvolumeclaims", GroupVersionKind: "/v1, Kind=PersistentVolumeClaim"
Name: "nfs", Namespace: "default"
for: "deploy/kubernetes_incubator_nfs_provisioner/claim.yaml": persistentvolumeclaims "nfs" is forbidden: only dynamically provisioned pvc can be resized and the storageclass that provisions the pvc must support resize
[root@node131 nfs]#
```

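The reference documentation says the following:

Allow volume expansion (StorageClass)

FEATURE STATE: Kubernetes v1.11 [beta]

PersistentVolumes can be configured to be expandable. When this feature is set to true, users can resize a volume by editing the corresponding PVC object.

The following volume types support volume expansion when the underlying StorageClass has the allowVolumeExpansion field set to true.

This feature can only be used to grow volumes, not to shrink them.

Note that the documentation does not list NFS volumes as expandable, so this has to be verified by testing. The test is as follows.

Edit the StorageClass and add allowVolumeExpansion: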

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: example-nfs
provisioner: example.com/nfs
# allow volume expansion
allowVolumeExpansion: true
mountOptions:
  - vers=4.1
```

After applying the update that expands the claim to 5Mi, the PVC is still not updated.

The PVC description shows:

```
[root@node131 nfs]# kubectl apply -f deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
persistentvolumeclaim/nfs unchanged
[root@node131 nfs]# kubectl describe pvc
Name:          nfs
Namespace:     default
StorageClass:  example-nfs
Status:        Bound
Volume:        pvc-1f9f7ceb-6ca8-453e-87a0-013e53841fad
Labels:        <none>
Annotations:   pv.kubernetes.io/bind-completed: yes
               pv.kubernetes.io/bound-by-controller: yes
               volume.beta.kubernetes.io/storage-provisioner: example.com/nfs
Finalizers:    [kubernetes.io/pvc-protection]
Capacity:      1Mi
Access Modes:  RWX
VolumeMode:    Filesystem
Used By:       <none>
Events:
  Type     Reason             Age    From           Message
  ----     ------             ----   ----           -------
  Warning  ExternalExpanding  40m    volume_expand  Ignoring the PVC: didn't find a plugin capable of expanding the volume; waiting for an external controller to process this PVC.
  Warning  ExternalExpanding  2m52s  volume_expand  Ignoring the PVC: didn't find a plugin capable of expanding the volume; waiting for an external controller to process this PVC.
[root@node131 nfs]#
```

Following the hint above, check whether a controller handled the PVC resize.

The kube-controller-manager log shows:

```
I0223 07:31:30.381326 1 expand_controller.go:277] Ignoring the PVC "default/nfs" (uid: "1f9f7ceb-6ca8-453e-87a0-013e53841fad") : didn't find a plugin capable of expanding the volume; waiting for an external controller to process this PVC.
I0223 07:31:30.381389 1 event.go:291] "Event occurred" object="default/nfs" kind="PersistentVolumeClaim" apiVersion="v1" type="Warning" reason="ExternalExpanding" message="Ignoring the PVC: didn't find a plugin capable of expanding the volume; waiting for an external controller to process this PVC."
```

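Cause: NFS volumes do not support online dynamic expansion. With a StorageClass, editing the PVC does not propagate the new size to the PV. The log shows why: no in-tree volume plugin can expand the NFS volume, and no external controller picked up the request either.

Notes:

Kubernetes has supported PV expansion since v1.8. Storage types such as GlusterFS and RBD already support it, but according to the official documentation NFS is not among them.

PV expansion requires two conditions:

- The PersistentVolumeClaimResize admission plugin is enabled: add PersistentVolumeClaimResize to the apiserver's --enable-admission-plugins flag.

- The StorageClass has allowVolumeExpansion set to true.

Once both conditions are met, users can change the PVC size to trigger expansion of the underlying PV. For a PV that contains a filesystem, the filesystem expansion only completes when a new Pod starts and mounts the PV in read-write mode. In other words, if the PV is already mounted by a Pod, that Pod must be restarted to complete the filesystem expansion. Supported filesystems currently include ext3/ext4 and XFS.

The above summarizes the official Kubernetes documentation on PV expansion. Next, we look at the PersistentVolumeClaimResize admission plugin.

Analyzing the code shows that the Validate operation of the PersistentVolumeClaimResize plugin is fairly simple and consists of these steps:

- Verify that the PVC object is valid;

- Compare the desired size with the previous size to decide whether an expansion is requested;

- Confirm that the PVC is currently in the Bound state;

- Check that the PVC comes from a StorageClass and that the StorageClass's AllowVolumeExpansion is true;

- Check whether the volume backend supports resize, based on the PV type; currently Glusterfs, Cinder, RBD, GCEPersistentDisk, AWSElasticBlockStore, and AzureFile.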
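Steps 2 to 4 can be modeled with plain Go types. This is a simplified, hypothetical sketch; `claim`, `storageClass`, and `validateResize` are illustrative names, and sizes are byte counts instead of `resource.Quantity`.

The step-5 backend-type check is left out. This matches the newer Validate code quoted later in this article. In the version tested here, the NFS PVC was not rejected at admission; the "didn't find a plugin" message came from the expand controller instead.

```go
package main

import (
	"errors"
	"fmt"
)

type claim struct {
	StorageBytes int64  // spec.resources.requests.storage
	Bound        bool   // status.phase == Bound
	StorageClass string // spec.storageClassName
}

type storageClass struct {
	AllowVolumeExpansion *bool // nil means "unset", which is treated as false
}

var errNotResizable = errors.New("only dynamically provisioned pvc can be resized and " +
	"the storageclass that provisions the pvc must support resize")

func validateResize(oldC, newC claim, classes map[string]storageClass) error {
	// Step 2: requests that do not grow the claim are not this check's concern.
	if newC.StorageBytes <= oldC.StorageBytes {
		return nil
	}
	// Step 3: only bound claims may be expanded.
	if !oldC.Bound {
		return errors.New("only bound persistent volume claims can be expanded")
	}
	// Step 4: same, non-empty class whose allowVolumeExpansion is explicitly true.
	if newC.StorageClass == "" || newC.StorageClass != oldC.StorageClass {
		return errNotResizable
	}
	sc, ok := classes[newC.StorageClass]
	if !ok || sc.AllowVolumeExpansion == nil || !*sc.AllowVolumeExpansion {
		return errNotResizable
	}
	return nil
}

func main() {
	yes := true
	oldC := claim{StorageBytes: 1 << 20, Bound: true, StorageClass: "example-nfs"}
	newC := oldC
	newC.StorageBytes = 5 << 20 // 1Mi -> 5Mi, as in the test above
	fmt.Println(validateResize(oldC, newC, map[string]storageClass{"example-nfs": {}}))
	fmt.Println(validateResize(oldC, newC, map[string]storageClass{"example-nfs": {AllowVolumeExpansion: &yes}}))
}
```

Before `allowVolumeExpansion: true` was added, the first call's error corresponds to the Forbidden message seen earlier. After it was added, admission passes and the request falls through to the expand controller.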

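PersistentVolumeClaimResize only validates whether a PVC resize is allowed. If the cluster does not enable the PersistentVolumeClaimResize admission plugin, could a PVC resize directly trigger a resize of the underlying volume? The ExpandController code only watches PVC update events and checks the PVC size and current state. It does not check whether the request passed the PersistentVolumeClaimResize plugin, so a resize could be carried out directly.

Example apiserver flags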

```
[root@node131 manifests]# cat kube-apiserver.yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.182.131:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=192.168.182.131
    - --allow-privileged=true
    - --anonymous-auth=True
    - --apiserver-count=1
    - --authorization-mode=Node,RBAC
    - --bind-address=0.0.0.0
    - --client-ca-file=/etc/kubernetes/ssl/ca.crt
    #- --enable-admission-plugins=NodeRestriction
    # No quotes: the command is exec'ed without a shell, so quotes would be passed literally.
    - --enable-admission-plugins=NodeRestriction,PersistentVolumeClaimResize
...
```

Reference code

kube-apiserver needs the volume expansion admission plugin (`resize.PluginName`, i.e. PersistentVolumeClaimResize) enabled. It appears in the ordered plugin list:

```go
// AllOrderedPlugins is the list of all the plugins in order.
var AllOrderedPlugins = []string{
	admit.PluginName,                        // AlwaysAdmit
	autoprovision.PluginName,                // NamespaceAutoProvision
	lifecycle.PluginName,                    // NamespaceLifecycle
	exists.PluginName,                       // NamespaceExists
	scdeny.PluginName,                       // SecurityContextDeny
	antiaffinity.PluginName,                 // LimitPodHardAntiAffinityTopology
	limitranger.PluginName,                  // LimitRanger
	serviceaccount.PluginName,               // ServiceAccount
	noderestriction.PluginName,              // NodeRestriction
	nodetaint.PluginName,                    // TaintNodesByCondition
	alwayspullimages.PluginName,             // AlwaysPullImages
	imagepolicy.PluginName,                  // ImagePolicyWebhook
	podsecuritypolicy.PluginName,            // PodSecurityPolicy
	podnodeselector.PluginName,              // PodNodeSelector
	podpriority.PluginName,                  // Priority
	defaulttolerationseconds.PluginName,     // DefaultTolerationSeconds
	podtolerationrestriction.PluginName,     // PodTolerationRestriction
	exec.DenyEscalatingExec,                 // DenyEscalatingExec
	exec.DenyExecOnPrivileged,               // DenyExecOnPrivileged
	eventratelimit.PluginName,               // EventRateLimit
	extendedresourcetoleration.PluginName,   // ExtendedResourceToleration
	label.PluginName,                        // PersistentVolumeLabel
	setdefault.PluginName,                   // DefaultStorageClass
	storageobjectinuseprotection.PluginName, // StorageObjectInUseProtection
	gc.PluginName,                           // OwnerReferencesPermissionEnforcement
	resize.PluginName,                       // PersistentVolumeClaimResize
	runtimeclass.PluginName,                 // RuntimeClass
	certapproval.PluginName,                 // CertificateApproval
	certsigning.PluginName,                  // CertificateSigning
	certsubjectrestriction.PluginName,       // CertificateSubjectRestriction
	defaultingressclass.PluginName,          // DefaultIngressClass

	// new admission plugins should generally be inserted above here
	// webhook, resourcequota, and deny plugins must go at the end

	mutatingwebhook.PluginName,   // MutatingAdmissionWebhook
	validatingwebhook.PluginName, // ValidatingAdmissionWebhook
	resourcequota.PluginName,     // ResourceQuota
	deny.PluginName,              // AlwaysDeny
}
```

```go
const (
	// PluginName is the name of pvc resize admission plugin
	PluginName = "PersistentVolumeClaimResize"
)

func (pvcr *persistentVolumeClaimResize) Validate(ctx context.Context, a admission.Attributes, o admission.ObjectInterfaces) error {
	if a.GetResource().GroupResource() != api.Resource("persistentvolumeclaims") {
		return nil
	}

	if len(a.GetSubresource()) != 0 {
		return nil
	}

	pvc, ok := a.GetObject().(*api.PersistentVolumeClaim)
	// if we can't convert then we don't handle this object so just return
	if !ok {
		return nil
	}
	oldPvc, ok := a.GetOldObject().(*api.PersistentVolumeClaim)
	if !ok {
		return nil
	}

	oldSize := oldPvc.Spec.Resources.Requests[api.ResourceStorage]
	newSize := pvc.Spec.Resources.Requests[api.ResourceStorage]

	if newSize.Cmp(oldSize) <= 0 {
		return nil
	}

	if oldPvc.Status.Phase != api.ClaimBound {
		return admission.NewForbidden(a, fmt.Errorf("Only bound persistent volume claims can be expanded"))
	}

	// Growing Persistent volumes is only allowed for PVCs for which their StorageClass
	// explicitly allows it
	if !pvcr.allowResize(pvc, oldPvc) {
		return admission.NewForbidden(a, fmt.Errorf("only dynamically provisioned pvc can be resized and "+
			"the storageclass that provisions the pvc must support resize"))
	}

	return nil
}

// Growing Persistent volumes is only allowed for PVCs for which their StorageClass
// explicitly allows it.
func (pvcr *persistentVolumeClaimResize) allowResize(pvc, oldPvc *api.PersistentVolumeClaim) bool {
	pvcStorageClass := apihelper.GetPersistentVolumeClaimClass(pvc)
	oldPvcStorageClass := apihelper.GetPersistentVolumeClaimClass(oldPvc)
	if pvcStorageClass == "" || oldPvcStorageClass == "" || pvcStorageClass != oldPvcStorageClass {
		return false
	}
	sc, err := pvcr.scLister.Get(pvcStorageClass)
	if err != nil {
		return false
	}
	if sc.AllowVolumeExpansion != nil {
		return *sc.AllowVolumeExpansion
	}
	return false
}
```

Controller code that emits "didn't find a plugin capable of expanding the volume":

```go
volumePlugin, err := expc.volumePluginMgr.FindExpandablePluginBySpec(volumeSpec)
if err != nil || volumePlugin == nil {
	msg := fmt.Errorf("didn't find a plugin capable of expanding the volume; " +
		"waiting for an external controller to process this PVC")
	eventType := v1.EventTypeNormal
	if err != nil {
		eventType = v1.EventTypeWarning
	}
	expc.recorder.Event(pvc, eventType, events.ExternalExpanding, fmt.Sprintf("Ignoring the PVC: %v.", msg))
	klog.Infof("Ignoring the PVC %q (uid: %q) : %v.", util.GetPersistentVolumeClaimQualifiedName(pvc), pvc.UID, msg)
	// If we are expecting that an external plugin will handle resizing this volume then
	// there is no point in requeuing this PVC.
	return nil
}
```

nfs-provisioner deployment

Deployment notes

Deploy

```shell
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/rbac.yaml
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/deployment.yaml
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/class.yaml
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
kubectl get pv
kubectl get pvc
```

Deployment output

```
[root@node131 nfs]# kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/deployment.yaml
serviceaccount/nfs-provisioner created
service/nfs-provisioner created
deployment.apps/nfs-provisioner created
[root@node131 nfs]# ls
custom-nfs-busybox-rc.yaml custom-nfs-pv.yaml nfs-busybox-rc.yaml nfs-pvc.yaml nfs-server-rc.yaml nfs-web-service.yaml test
custom-nfs-centos-rc.yaml custom-nfs-server-rc.yaml nfs-data nfs-pv.png nfs-server-service.yaml provisioner
custom-nfs-pvc.yaml deploy nfsmount.conf nfs-pv.yaml nfs-web-rc.yaml README.md
[root@node131 nfs]# kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/class.yaml
storageclass.storage.k8s.io/example-nfs created
[root@node131 nfs]# kubectl get sc
NAME          PROVISIONER       RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
example-nfs   example.com/nfs   Delete          Immediate           false                  43s
[root@node131 nfs]#
[root@node131 nfs]# kubectl describe sc
Name:                  example-nfs
IsDefaultClass:        No
Annotations:           <none>
Provisioner:           example.com/nfs
Parameters:            <none>
AllowVolumeExpansion:  <unset>
MountOptions:
  vers=4.1
ReclaimPolicy:      Delete
VolumeBindingMode:  Immediate
Events:             <none>
[root@node131 nfs]#
[root@node131 nfs]# kubectl get pv
No resources found
[root@node131 nfs]# kubectl get pvc
No resources found in default namespace.
[root@node131 nfs]#
[root@node131 nfs]# kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
persistentvolumeclaim/nfs created
[root@node131 nfs]#
[root@node131 nfs]# kubectl get pvc
NAME   STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
nfs    Bound    pvc-26703096-84df-4c18-88f5-16d0b09be156   1Mi        RWX            example-nfs    3s
[root@node131 nfs]#
[root@node131 nfs]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM         STORAGECLASS   REASON   AGE
pvc-26703096-84df-4c18-88f5-16d0b09be156   1Mi        RWX            Delete           Bound    default/nfs   example-nfs             5s
[root@node131 nfs]#
[root@node131 nfs]# kubectl get pvc
NAME   STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
nfs    Bound    pvc-26703096-84df-4c18-88f5-16d0b09be156   1Mi        RWX            example-nfs    20s
[root@node131 nfs]# kubectl describe pvc
Name:          nfs
Namespace:     default
StorageClass:  example-nfs
Status:        Bound
Volume:        pvc-26703096-84df-4c18-88f5-16d0b09be156
Labels:        <none>
Annotations:   pv.kubernetes.io/bind-completed: yes
               pv.kubernetes.io/bound-by-controller: yes
               volume.beta.kubernetes.io/storage-provisioner: example.com/nfs
Finalizers:    [kubernetes.io/pvc-protection]
Capacity:      1Mi
Access Modes:  RWX
VolumeMode:    Filesystem
Used By:       <none>
Events:
  Type    Reason                 Age                From                                                                                     Message
  ----    ------                 ----               ----                                                                                     -------
  Normal  ExternalProvisioning   27s (x2 over 27s)  persistentvolume-controller                                                              waiting for a volume to be created, either by external provisioner "example.com/nfs" or manually created by system administrator
  Normal  Provisioning           27s                example.com/nfs_nfs-provisioner-66ccf9bc7b-jpm2w_9633218c-812f-4e94-b77e-9f922ec2edb6  External provisioner is provisioning volume for claim "default/nfs"
  Normal  ProvisioningSucceeded  27s                example.com/nfs_nfs-provisioner-66ccf9bc7b-jpm2w_9633218c-812f-4e94-b77e-9f922ec2edb6  Successfully provisioned volume pvc-26703096-84df-4c18-88f5-16d0b09be156
[root@node131 nfs]#
[root@node131 nfs]# kubectl describe pv
Name:            pvc-26703096-84df-4c18-88f5-16d0b09be156
Labels:          <none>
Annotations:     EXPORT_block:
                   EXPORT
                   {
                     Export_Id = 1;
                     Path = /export/pvc-26703096-84df-4c18-88f5-16d0b09be156;
                     Pseudo = /export/pvc-26703096-84df-4c18-88f5-16d0b09be156;
                     Access_Type = RW;
                     Squash = no_root_squash;
                     SecType = sys;
                     Filesystem_id = 1.1;
                     FSAL {
                       Name = VFS;
                     }
                   }
                 Export_Id: 1
                 Project_Id: 0
                 Project_block:
                 Provisioner_Id: 1dbb40c3-3fd8-412d-94d8-a7b832bd98d3
                 kubernetes.io/createdby: nfs-dynamic-provisioner
                 pv.kubernetes.io/provisioned-by: example.com/nfs
Finalizers:      [kubernetes.io/pv-protection]
StorageClass:    example-nfs
Status:          Bound
Claim:           default/nfs
Reclaim Policy:  Delete
Access Modes:    RWX
VolumeMode:      Filesystem
Capacity:        1Mi
Node Affinity:   <none>
Message:
Source:
    Type:      NFS (an NFS mount that lasts the lifetime of a pod)
    Server:    10.233.14.76
    Path:      /export/pvc-26703096-84df-4c18-88f5-16d0b09be156
    ReadOnly:  false
Events:        <none>
[root@node131 nfs]#
[root@node131 ~]# kubectl get po -A
NAMESPACE     NAME                                       READY   STATUS    RESTARTS   AGE
default       nfs-provisioner-66ccf9bc7b-jpm2w           1/1     Running   0          2m49s
kube-system   calico-kube-controllers-65b86747bd-c4qsp   1/1     Running   16         47d
kube-system   calico-node-lglh4                          1/1     Running   18         47d
kube-system   coredns-8677555d68-jwggm                   1/1     Running   4          6d
kube-system   kube-apiserver-node131                     1/1     Running   16         47d
kube-system   kube-controller-manager-node131            1/1     Running   17         47d
kube-system   kube-proxy-mktp9                           1/1     Running   16         47d
kube-system   kube-scheduler-node131                     1/1     Running   17         47d
kube-system   nodelocaldns-lfjzs                         1/1     Running   16         47d
[root@node131 ~]#
```

Check the contents of the NFS export directory (/srv on the node):

```
[root@node131 k8s_pv_pvc]# ll /srv/
total 16
-rw-r--r--. 1 root root 4960 Feb 8 10:48 ganesha.log
-rw-------. 1 root root   36 Feb 8 10:46 nfs-provisioner.identity
drwxrwsrwx. 2 root root    6 Feb 8 10:51 pvc-26703096-84df-4c18-88f5-16d0b09be156
drwxr-xr-x. 3 root root   19 Feb 8 10:46 v4old
drwxr-xr-x. 3 root root   19 Feb 8 10:46 v4recov
-rw-------. 1 root root  921 Feb 8 10:51 vfs.conf
```

Uninstall

```shell
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/class.yaml
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/deployment.yaml
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/rbac.yaml
```

Testing

Write test

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: write-pod
spec:
  containers:
  - name: write-pod
    image: busybox
    imagePullPolicy: IfNotPresent
    command:
      - "/bin/sh"
    args:
      - "-c"
      - "touch /mnt/SUCCESS && exit 0 || exit 1"
    volumeMounts:
      - name: nfs-pvc
        mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: nfs
```

The pod writes through the NFS PVC, that is, into the directory /srv/pvc-idxxxxx/ on the node.

```shell
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/write-pod.yaml
```

```
[root@node131 srv]# cd pvc-26703096-84df-4c18-88f5-16d0b09be156/
[root@node131 pvc-26703096-84df-4c18-88f5-16d0b09be156]# ll
total 0
-rw-r--r--. 1 root root 0 Feb 8 11:16 SUCCESS
```

Read test

```yaml
kind: Pod
apiVersion: v1
metadata:
  name: read-pod
spec:
  containers:
  - name: read-pod
    image: busybox
    imagePullPolicy: IfNotPresent
    command:
      - "/bin/sh"
    args:
      - "-c"
      - "test -f /mnt/SUCCESS && exit 0 || exit 1"
    volumeMounts:
      - name: nfs-pvc
        mountPath: "/mnt"
  restartPolicy: "Never"
  volumes:
    - name: nfs-pvc
      persistentVolumeClaim:
        claimName: nfs
```

The pod reads through the NFS PVC, that is, from the directory /srv/pvc-idxxxxx/ on the node.

```shell
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/read-pod.yaml
```

Pod status

```
[root@node131 pvc-26703096-84df-4c18-88f5-16d0b09be156]# kubectl get po -A
NAMESPACE     NAME                                       READY   STATUS      RESTARTS   AGE
default       nfs-provisioner-66ccf9bc7b-jpm2w           1/1     Running     0          38m
default       read-pod                                   0/1     Completed   0          7s
default       write-pod                                  0/1     Completed   0          8m43s
kube-system   calico-kube-controllers-65b86747bd-c4qsp   1/1     Running     16         47d
kube-system   calico-node-lglh4                          1/1     Running     18         47d
kube-system   coredns-8677555d68-jwggm                   1/1     Running     4          6d1h
kube-system   kube-apiserver-node131                     1/1     Running     16         47d
kube-system   kube-controller-manager-node131            1/1     Running     17         47d
kube-system   kube-proxy-mktp9                           1/1     Running     16         47d
kube-system   kube-scheduler-node131                     1/1     Running     17         47d
kube-system   nodelocaldns-lfjzs                         1/1     Running     16         47d
```

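Deleting the PVC while application pods still use it

With the application pods in the Completed state, delete the PVC.

The command blocks, and the PVC stays in the Terminating state while it is protected (by the kubernetes.io/pvc-protection finalizer).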

```
[root@node131 nfs]# kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
persistentvolumeclaim "nfs" deleted
^C
[root@node131 nfs]#
[root@node131 nfs]# kubectl get pvc
NAME   STATUS        VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
nfs    Terminating   pvc-26703096-84df-4c18-88f5-16d0b09be156   1Mi        RWX            example-nfs    39m
```

Deleting the PVC once no application pod uses it

```
[root@node131 nfs]# kubectl delete po write-pod
pod "write-pod" deleted
[root@node131 nfs]#
[root@node131 nfs]# kubectl get pvc
NAME   STATUS        VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
nfs    Terminating   pvc-26703096-84df-4c18-88f5-16d0b09be156   1Mi        RWX            example-nfs    41m
[root@node131 nfs]#
[root@node131 nfs]# kubectl delete po read-pod
pod "read-pod" deleted
[root@node131 nfs]#
[root@node131 nfs]# kubectl get pvc
No resources found in default namespace.
[root@node131 nfs]#
```

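The previously blocked PVC deletion then completes. The PVC is removed, and because the PV's reclaim policy is Delete, the PV and the PVC's backing storage directory are deleted as well.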

```
[root@node131 nfs]# kubectl get pvc
No resources found in default namespace.
[root@node131 nfs]#
[root@node131 nfs]# kubectl get pv
No resources found
[root@node131 nfs]#
[root@node131 srv]# ll
total 16
-rw-r--r--. 1 root root 5140 Feb 8 11:32 ganesha.log
-rw-------. 1 root root   36 Feb 8 10:46 nfs-provisioner.identity
drwxr-xr-x. 3 root root   19 Feb 8 10:46 v4old
drwxr-xr-x. 3 root root   19 Feb 8 10:46 v4recov
-rw-------. 1 root root  667 Feb 8 11:32 vfs.conf
[root@node131 srv]#
```
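The deletion behavior observed in these two tests can be summarized in a small model. `pvcDeletionResult` is a hypothetical sketch of the outcomes, not controller code. As observed above, any pod object that still references the claim, even a Completed one, keeps the PVC in Terminating because of the kubernetes.io/pvc-protection finalizer. Once those pods are deleted, the PVC goes away, and with the Delete reclaim policy the PV and its directory under /srv are removed too. The Retain branch reflects standard Kubernetes reclaim semantics rather than something tested here.

```go
package main

import "fmt"

// pod is a hypothetical, minimal view of a pod object.
type pod struct {
	Name  string
	Phase string // e.g. "Running", "Completed"; intentionally not consulted below
	Claim string // name of the PVC the pod mounts
}

// pvcDeletionResult reports what happens after `kubectl delete pvc <claim>`.
// Per the observation above, a pod referencing the claim blocks deletion
// regardless of its phase; only deleting the pod object releases the claim.
func pvcDeletionResult(claim string, pods []pod, reclaimPolicy string) (pvcGone, pvGone, dirGone bool) {
	for _, p := range pods {
		if p.Claim == claim {
			return false, false, false // PVC stays Terminating
		}
	}
	switch reclaimPolicy {
	case "Delete":
		return true, true, true // PV and its /srv/pvc-<uid> directory are removed
	default: // e.g. "Retain": the PV (now Released) and its data are kept
		return true, false, false
	}
}

func main() {
	pods := []pod{{"write-pod", "Completed", "nfs"}, {"read-pod", "Completed", "nfs"}}
	fmt.Println(pvcDeletionResult("nfs", pods, "Delete"))
	fmt.Println(pvcDeletionResult("nfs", nil, "Delete"))
}
```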

Quota test

Deploy the test pods

```shell
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/test_pod/custom-nfs-busybox-rc.yaml
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/test_pod/nfs-web-rc.yaml
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/test_pod/nfs-web-service.yaml
```

Contents of the volume directory: index.html has been added.

```
[root@node131 pvc-4f32a250-f6da-4534-80fd-196221b555d9]# ll -h
total 4.0K
-rw-r--r--. 1 root root 611 Feb 8 14:15 index.html
-rw-r--r--. 1 root root   0 Feb 8 11:57 SUCCESS
[root@node131 pvc-4f32a250-f6da-4534-80fd-196221b555d9]#
[root@node131 pvc-4f32a250-f6da-4534-80fd-196221b555d9]# cat index.html
Mon Feb 8 06:14:38 UTC 2021
nfs-busybox-54846
Mon Feb 8 06:14:39 UTC 2021
nfs-busybox-8fqcr
Mon Feb 8 06:14:44 UTC 2021
```

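The test PVC requests 1Mi. Write a 2 MB file into the mounted directory /srv/pvc-4f32a250-f6da-4534-80fd-196221b555d9 (see the dd command in the appendix), then check whether the test pods can still write data. With the nfs provisioner's default parameters they can. index.html grew from 11K to 14K and keeps growing: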

```
[root@node131 pvc-4f32a250-f6da-4534-80fd-196221b555d9]# ll -h
total 2.1M
-rw-r--r--. 1 root root  11K Feb  8 14:28 index.html
-rw-r--r--. 1 root root    0 Feb  8 11:57 SUCCESS
-rw-r--r--. 1 root root 2.0M Feb  8 14:25 tmp.2M
[root@node131 pvc-4f32a250-f6da-4534-80fd-196221b555d9]# pwd
/srv/pvc-4f32a250-f6da-4534-80fd-196221b555d9
[root@node131 pvc-4f32a250-f6da-4534-80fd-196221b555d9]# ll -h
total 2.1M
-rw-r--r--. 1 root root  14K Feb  8 14:31 index.html
-rw-r--r--. 1 root root    0 Feb  8 11:57 SUCCESS
-rw-r--r--. 1 root root 2.0M Feb  8 14:25 tmp.2M
[root@node131 pvc-4f32a250-f6da-4534-80fd-196221b555d9]#
```
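The provisioner's default parameters do not enforce the requested capacity. A check like the following, run on the NFS server, shows whether a volume has gone past its request. This is a minimal sketch with several assumptions. `quantity_to_bytes` and `usage_vs_request` are hypothetical helpers. Only the binary suffixes Ki, Mi, Gi and Ti are handled. GNU `du -sb` is assumed to be available.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare the bytes actually stored in a PV directory
# with the capacity its PVC requested. Nothing in Kubernetes or in the nfs
# provisioner (default parameters) performs a check like this.

# Convert a Kubernetes binary quantity (Ki/Mi/Gi/Ti) or plain bytes to bytes.
quantity_to_bytes() {
  local q=$1 num unit
  num=${q%%[!0-9]*}   # leading digits
  unit=${q#"$num"}    # remaining suffix, e.g. "Mi"
  case $unit in
    "") echo "$num" ;;
    Ki) echo $(( num * 1024 )) ;;
    Mi) echo $(( num * 1024 ** 2 )) ;;
    Gi) echo $(( num * 1024 ** 3 )) ;;
    Ti) echo $(( num * 1024 ** 4 )) ;;
    *)  echo "unsupported quantity: $q" >&2; return 1 ;;
  esac
}

# Print "over" if the apparent size of DIR exceeds the requested quantity,
# otherwise "within".
usage_vs_request() {
  local dir=$1 request=$2 used limit
  used=$(du -sb "$dir" | cut -f1)   # GNU du: apparent size in bytes
  limit=$(quantity_to_bytes "$request") || return 1
  if (( used > limit )); then echo over; else echo within; fi
}
```

For example, `usage_vs_request /srv/pvc-4f32a250-f6da-4534-80fd-196221b555d9 1Mi` should print `over` once tmp.2M has been written. The check only reports the overrun. Stopping the writes would need XFS project quotas.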

Mounts on the node. Four workload pods are running, two writers and two readers, and each has its own mount of the same export:

```
[root@node131 2129b202-2d91-400f-b04e-5e57f9c105b6]# mount |grep pvc
10.233.14.76:/export/pvc-4f32a250-f6da-4534-80fd-196221b555d9 on /var/lib/kubelet/pods/69448210-b1c1-4444-8c24-29024770acff/volumes/kubernetes.io~nfs/pvc-4f32a250-f6da-4534-80fd-196221b555d9 type nfs4 (rw,relatime,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=10.233.14.76,local_lock=none,addr=10.233.14.76)
10.233.14.76:/export/pvc-4f32a250-f6da-4534-80fd-196221b555d9 on /var/lib/kubelet/pods/337827e0-4924-4afb-b41e-a19c522d59d6/volumes/kubernetes.io~nfs/pvc-4f32a250-f6da-4534-80fd-196221b555d9 type nfs4 (rw,relatime,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=10.233.14.76,local_lock=none,addr=10.233.14.76)
10.233.14.76:/export/pvc-4f32a250-f6da-4534-80fd-196221b555d9 on /var/lib/kubelet/pods/888dd122-e529-4f36-bca4-828667c997dd/volumes/kubernetes.io~nfs/pvc-4f32a250-f6da-4534-80fd-196221b555d9 type nfs4 (rw,relatime,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=10.233.14.76,local_lock=none,addr=10.233.14.76)
10.233.14.76:/export/pvc-4f32a250-f6da-4534-80fd-196221b555d9 on /var/lib/kubelet/pods/0298070d-66e2-43e1-947c-d4ae0f5fab4b/volumes/kubernetes.io~nfs/pvc-4f32a250-f6da-4534-80fd-196221b555d9 type nfs4 (rw,relatime,vers=4.1,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=10.233.14.76,local_lock=none,addr=10.233.14.76)
[root@node131 2129b202-2d91-400f-b04e-5e57f9c105b6]#
```
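The mount points above follow kubelet's per-pod layout, `/var/lib/kubelet/pods/<pod-uid>/volumes/kubernetes.io~nfs/<pv-name>`. Because of this, the pods that share a PV can be listed from `mount` output. The sketch below does that. The function name `pods_mounting_pv` is hypothetical.

```shell
# Hypothetical helper: read `mount` output on stdin and print the UIDs of the
# pods whose NFS volume directory mounts the given PV.
# Field 3 of a `mount` line is the mount point, e.g.
# /var/lib/kubelet/pods/<pod-uid>/volumes/kubernetes.io~nfs/<pv-name>
pods_mounting_pv() {
  local pv=$1
  awk -v pv="$pv" '
    $3 ~ ("/volumes/kubernetes.io~nfs/" pv "$") {
      n = split($3, parts, "/")   # parts[1] is empty (leading "/"),
      print parts[6]              # parts[5] is "pods", parts[6] the pod UID
    }' | sort -u
}
```

On this node, `mount | pods_mounting_pv pvc-4f32a250-f6da-4534-80fd-196221b555d9 | wc -l` would count the four pods.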

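Delete the test pods but keep the PV and PVC. The mounted directory still exists and now holds more than 2 MB.

Redeploy the test pods. The deployment succeeds, which shows that when a pod uses a PVC, nothing checks the data already in the directory against the size the PVC requested.

In fact the pod sees the full capacity of the exported filesystem (17G here, not 1Mi), as shown below: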

```
[root@node131 srv]# df -hT |grep pvc
Filesystem                                                     Type  Size  Used  Avail  Use%  Mounted on
10.233.14.76:/export/pvc-4f32a250-f6da-4534-80fd-196221b555d9 nfs4 17G 11G 6.8G 61% /var/lib/kubelet/pods/06940ab3-4d60-4015-8c39-3bb15b331e7f/volumes/kubernetes.io~nfs/pvc-4f32a250-f6da-4534-80fd-196221b555d9
```

Enabling the quota parameter

Delete the existing nfs_provisioner, change its parameters to enable XFS quotas, and redeploy:

```shell
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/rbac.yaml
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/xfs_deployment.yaml
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/class.yaml
kubectl create -f deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
sleep 10s
kubectl get pv
kubectl get pvc
```

nfs_provisioner fails to start because XFS quotas must be enabled on the system.

I did not manage to enable them in the local VM environment.

Uninstall

```shell
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/claim.yaml
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/class.yaml
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/xfs_deployment.yaml
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/rbac.yaml
```

Delete the test pods

```shell
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/test_pod/nfs-web-service.yaml
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/test_pod/nfs-web-rc.yaml
kubectl delete -f deploy/kubernetes_incubator_nfs_provisioner/test_pod/custom-nfs-busybox-rc.yaml
```

nfs-client-provisioner

If the cluster environment already has a storage service (an existing NFS server), the nfs-subdir-external-provisioner project can provide dynamic PV support.

Kubernetes NFS-Client Provisioner

NFS subdir external provisioner is an automatic provisioner that uses your existing, already configured NFS server to support dynamic provisioning of Kubernetes Persistent Volumes via Persistent Volume Claims. Persistent volumes are provisioned as ${namespace}-${pvcName}-${pvName}.
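The naming rule above applies when the StorageClass sets no pathPattern. The helper below shows which directory that rule would create on the NFS server. It is a hypothetical shell illustration, not the provisioner's Go code. It assumes the share root on the server is the deployment's NFS_PATH.

```shell
# Hypothetical helper: path of the directory created for a PV under the
# default naming rule ${namespace}-${pvcName}-${pvName}.
pv_dir_path() {
  local share_root=$1 namespace=$2 pvc_name=$3 pv_name=$4
  # Strip one trailing slash from the share root so the path is not doubled.
  printf '%s/%s-%s-%s\n' "${share_root%/}" "$namespace" "$pvc_name" "$pv_name"
}
```

For example, `pv_dir_path /mnt/inspurfs default test-claim pvc-1234` prints `/mnt/inspurfs/default-test-claim-pvc-1234`.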

nfs-subdir-external-provisioner

make container

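```shell
# export GOPATH=/home/wangb/projects
export GO111MODULE=on
# export GO111MODULE=off
# go env -w GOPROXY=https://goproxy.cn,direct

# Enter the project directory, then download the dependencies
go mod tidy

# Generate the project's vendor directory
go mod vendor

# Workaround: pulling gcr.io/distroless/static:latest fails, so pull it from a mirror
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/distroless/static:latest
# If the build still looks for gcr.io/distroless/static:latest, the mirror image may
# need to be retagged under that name (assumption, not part of the original steps):
# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/distroless/static:latest gcr.io/distroless/static:latest

## Build the image
# Base image
curl -s https://zhangguanzhang.github.io/bash/pull.sh | bash -s -- gcr.io/distroless/static:latest

# Build
make container

# Resulting image name and tag:
# `nfs-subdir-external-provisioner:latest` will be created.
```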

Configuration files

deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          # image: gcr.io/k8s-staging-sig-storage/nfs-subdir-external-provisioner:v4.0.0
          image: nfs-subdir-external-provisioner:latest
          imagePullPolicy: "IfNotPresent"
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.11.54
            - name: NFS_PATH
              value: /mnt/inspurfs
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.11.54
            path: /mnt/inspurfs
```

storage class

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  pathPattern: "${.PVC.namespace}/${.PVC.annotations.nfs.io/storage-path}" # waits for nfs.io/storage-path annotation, if not specified will accept as empty string.
  archiveOnDelete: "false"
```
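The `archiveOnDelete` parameter decides what happens to a PV's backing directory when the PV is deleted. Below is a rough simulation on local directories. It assumes the behaviour described in the project's README: a `false` value deletes the directory, and any other value keeps it and renames it with an `archived-` prefix. The function name `on_pv_delete` is hypothetical.

```shell
# Hypothetical simulation of archiveOnDelete when a PV of this class is deleted.
# Assumption: "false" removes the directory; anything else (including unset)
# keeps the data and renames the directory to archived-<dirname>.
on_pv_delete() {
  local dir=$1 archive_on_delete=$2
  if [ "$archive_on_delete" = "false" ]; then
    rm -rf -- "$dir"
  else
    mv -- "$dir" "$(dirname -- "$dir")/archived-$(basename -- "$dir")"
  fi
}
```

With `archiveOnDelete: "false"` as configured here, data is removed together with the PV, much like the Delete reclaim policy seen earlier with the nfs-ganesha provisioner.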

pvc

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: test-claim
  annotations:
    nfs.io/storage-path: "test-path" # not required, depending on whether this annotation was shown in the storage class description
spec:
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```
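With the StorageClass above, the directory for `test-claim` is built from `pathPattern` rather than from `${namespace}-${pvcName}-${pvName}`. The sketch below performs that substitution. It handles only the two placeholders used in this document. `expand_path_pattern` is a hypothetical helper, not the provisioner's implementation.

```shell
# Hypothetical sketch: substitute the two pathPattern placeholders used here.
expand_path_pattern() {
  local pattern=$1 namespace=$2 storage_path=$3
  local ns_ph='${.PVC.namespace}'
  local sp_ph='${.PVC.annotations.nfs.io/storage-path}'
  local out=$pattern
  # Quoted patterns and replacements are matched and inserted literally.
  out=${out//"$ns_ph"/"$namespace"}
  out=${out//"$sp_ph"/"$storage_path"}
  printf '%s\n' "$out"
}
```

For `test-claim` in the `default` namespace this gives `default/test-path`, so the data should land in `/mnt/inspurfs/default/test-path` on the NFS server. Without the annotation, the result is `default/`.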

Deploy

```shell
kubectl create -f custom_deploy/rbac.yaml
kubectl create -f custom_deploy/deployment.yaml
kubectl create -f custom_deploy/class.yaml
kubectl create -f custom_deploy/test-claim.yaml -f custom_deploy/test-pod.yaml
kubectl create -f custom_deploy/run-test-pod.yaml
```

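Questions and conclusions

- Can PVs be shared in a way that allows overcommitting storage? Is there a concept of users?
- Can the size of an NFS PVC be enforced?
  - A PVC can request a size, but neither the PVC nor Kubernetes enforces it. The limit is actually enforced by the NFS provisioner's quota parameter together with XFS filesystem quotas.
  - Kubernetes only manages storage metadata through PVs and PVCs. It mounts and unmounts the storage directories through kubelet's volume manager.
- When one PV is used by several pods, can the total usage be limited?
  - Pods do not use PVs directly. They bind to a PV through a PVC that claims it.
  - At present, total storage usage cannot be limited in two cases: when PVs are created manually instead of dynamically through a StorageClass, or when the nfs-provisioner behind the StorageClass does not enable the quota parameter.
- Can a PV be updated online, for example expanded?

  Whether a PV is created manually or dynamically, editing the PV directly takes effect (for example, changing its capacity from 1M to 2M). However, the capacity of a PVC that was already bound to it does not change and remains 1M.

  To resize the backing PV by editing the PVC, you must use a StorageClass, which links PVC expansion to the PV. Only dynamically provisioned PVCs can be resized, and the storage type behind the StorageClass must support resizing. All of the following must hold:

  - kube-apiserver: the PersistentVolumeClaimResize admission plugin is enabled
  - allowVolumeExpansion is set to true in the StorageClass YAML
  - the storage type is among those the official documentation lists as supporting StorageClass expansion

  NFS cannot be expanded through a PVC resize that automatically updates the PV.
- If the underlying storage fails, can Kubernetes detect it, manage it, and recover?
  - Failure handling depends on the specific storage component (e.g., NFS). Alternatively, package NFS as nfs-server + nfs-provisioner and manage both as pods in the Kubernetes cluster.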
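For the expansion question, the StorageClass condition comes down to a single field. The sketch below writes a manifest that sets it. The class name `expandable-example` is hypothetical. Since NFS volumes are not resized in this setup, the field alone does not make this provisioner grow volumes.

```shell
# Sketch: write a StorageClass manifest with allowVolumeExpansion enabled.
manifest="${TMPDIR:-/tmp}/expandable-class.yaml"
cat > "$manifest" <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: expandable-example   # hypothetical name
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
allowVolumeExpansion: true
EOF
echo "wrote $manifest"
```

Apply it with `kubectl apply -f "$manifest"`. A PVC of an expandable class is then resized by raising its request, for example `kubectl patch pvc <name> -p '{"spec":{"resources":{"requests":{"storage":"2Mi"}}}}'`.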

References

Appendix

Configuration

StorageClass

class.yaml

```yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: example-nfs
provisioner: example.com/nfs
mountOptions:
  - vers=4.1
```

PersistentVolumeClaim

claim.yaml

```yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs
spec:
  storageClassName: example-nfs
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
```

Creating a file of a given size with dd

```shell
# Read 1G from /dev/zero at a time, 5 times, and write it to the file tmp.5G
# dd if=/dev/zero of=tmp.5G bs=1G count=5
dd if=/dev/zero of=tmp.2M bs=1M count=2
```

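- if=FILE: the input file. If omitted, dd reads from standard input. Here it is /dev/zero, a Linux pseudo-file that produces an endless stream of zero bytes.
- of=FILE: the output file. If omitted, dd writes to standard output.
- bs=BYTES: the number of bytes read and written at a time. Units such as K, M and G can be used. The input and output block sizes can also be set separately with ibs and obs; bs sets both.
- count=BLOCKS: the number of input blocks to copy. The block size comes from ibs (or bs), so count applies to input only.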
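With GNU dd, `bs=1M count=2` copies 2 blocks of 1 MiB each, i.e. 2 × 1048576 = 2097152 bytes. The quick check below confirms this in a temporary directory. It assumes GNU `stat -c %s` is available.

```shell
# Create the 2 MiB test file used in the quota test and print its size in bytes.
work=$(mktemp -d)
dd if=/dev/zero of="$work/tmp.2M" bs=1M count=2 2>/dev/null
size=$(stat -c %s "$work/tmp.2M")   # GNU stat: file size in bytes
echo "tmp.2M is $size bytes"
```

The printed size is 2097152 bytes, twice the 1048576 bytes that the test PVC's 1Mi request stands for.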

Enabling XFS quotas

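```shell
# No output at all means that no quotas are configured
xfs_quota -x -c 'report' /
mount -o remount,rw,uquota,prjquota /
# Quotas must be in effect from the moment the partition is mounted;
# e.g. add a separate disk to serve as Docker's data directory
#fdisk -l | grep sdb
#Disk /dev/sdb: 53.7 GB, 53687091200 bytes, 104857600 sector
```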

Edit /etc/fstab (vi /etc/fstab) and add quota options to the root filesystem entry:

```
[root@node131 nfs]# cat /etc/fstab
#
# /etc/fstab
# Created by anaconda on Thu Dec 17 15:27:09 2020
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
#/dev/mapper/centos_master-root / xfs defaults 0 0
/dev/mapper/centos_master-root / xfs defaults,usrquota,grpquota 0 0
UUID=d13f3d45-3ac2-4cda-b1ce-715d3153a900 /boot xfs defaults 0 0
```

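Note: the XFS quota mount options are usrquota/uquota (user quotas), grpquota/gquota (group quotas) and prjquota/pquota (project quotas). The nfs provisioner's XFS quota support needs project quotas, which the fstab entry above does not enable.

Unmount and remount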

```shell
#umount /home
#mount -a
# The filesystem being changed is /, which cannot be unmounted, so reboot instead
reboot
```

Check

```shell
# mount | grep home
mount | grep centos
```

```
[root@node131 ~]# mount | grep centos
/dev/mapper/centos_master-root on / type xfs (rw,relatime,seclabel,attr2,inode64,noquota)
[root@node131 ~]#
```

Result: quotas did not take effect in the local VM environment, and the root filesystem is still mounted with noquota.
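A likely explanation, not verified in this environment, has two parts. First, XFS enables quota accounting only when a filesystem is mounted, so `mount -o remount,...` cannot turn it on. On CentOS 7 the root filesystem takes its initial mount options from the kernel parameter `rootflags=`, not from /etc/fstab. Second, the fstab line above adds `usrquota,grpquota`, while the provisioner asks for `pquota`/`prjquota`. The hypothetical helper `add_grub_cmdline_param` below adds such a parameter to a grub defaults file. Try it on a copy of /etc/default/grub first.

```shell
# Hypothetical helper: append a kernel parameter (e.g. rootflags=prjquota) to
# GRUB_CMDLINE_LINUX in a grub defaults file, unless it is already present.
add_grub_cmdline_param() {
  local file=$1 param=$2
  # Already present (preceded and followed by a quote or a space)? Do nothing.
  grep -q "^GRUB_CMDLINE_LINUX=.*[\" ]${param}[\" ]" "$file" && return 0
  # GNU sed: insert the parameter before the closing quote.
  sed -i "s|^GRUB_CMDLINE_LINUX=\"\(.*\)\"|GRUB_CMDLINE_LINUX=\"\1 ${param}\"|" "$file"
}
```

After the file is edited, the GRUB configuration has to be regenerated. On a BIOS-booted CentOS 7 machine the command is `grub2-mkconfig -o /boot/grub2/grub.cfg`. Then reboot. `mount | grep centos` should then list `prjquota` instead of `noquota`.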

Errors

The image cannot be built from the new project code

make container fails with the following output:

```
[root@node1 nfs-ganesha-server-and-external-provisioner-master]# make container
./release-tools/verify-go-version.sh "go"
fatal: Not a git repository (or any parent up to mount point /home)
Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).
mkdir -p bin
echo '' | tr ';' '\n' | while read -r os arch suffix; do \
if ! (set -x; CGO_ENABLED=0 GOOS="$os" GOARCH="$arch" go build -a -ldflags ' -X main.version= -extldflags "-static"' -o "./bin/nfs-provisioner$suffix" ./cmd/nfs-provisioner); then \
echo "Building nfs-provisioner for GOOS=$os GOARCH=$arch failed, see error(s) above."; \
exit 1; \
fi; \
done
+ CGO_ENABLED=0
+ GOOS=
+ GOARCH=
+ go build -a -ldflags ' -X main.version= -extldflags "-static"' -o ./bin/nfs-provisioner ./cmd/nfs-provisioner
fatal: Not a git repository (or any parent up to mount point /home)
Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).
docker build -t nfs-provisioner:latest -f Dockerfile --label revision= .
Sending build context to Docker daemon 69.19MB
Step 1/19 : FROM fedora:30 AS build
30: Pulling from library/fedora
401909e6e2aa: Pull complete
Digest: sha256:3a0c8c86d8ac2d1bbcfd08d40d3b757337f7916fb14f40efcb1d1137a4edef45
Status: Downloaded newer image for fedora:30
---> 177d5adf0c6c
Step 2/19 : RUN dnf install -y tar gcc cmake-3.14.2-1.fc30 autoconf libtool bison flex make gcc-c++ krb5-devel dbus-devel jemalloc-devel libnfsidmap-devel libnsl2-devel userspace-rcu-devel patch libblkid-devel
---> Running in b6cb5632e5a4
Fedora Modular 30 - x86_64 0.0 B/s | 0 B 04:00
Errors during downloading metadata for repository 'fedora-modular':
- Curl error (6): Couldn't resolve host name for https://mirrors.fedoraproject.org/metalink?repo=fedora-modular-30&arch=x86_64 [Could not resolve host: mirrors.fedoraproject.org]
Error: Failed to download metadata for repo 'fedora-modular': Cannot prepare internal mirrorlist: Curl error (6): Couldn't resolve host name for https://mirrors.fedoraproject.org/metalink?repo=fedora-modular-30&arch=x86_64 [Could not resolve host: mirrors.fedoraproject.org]
The command '/bin/sh -c dnf install -y tar gcc cmake-3.14.2-1.fc30 autoconf libtool bison flex make gcc-c++ krb5-devel dbus-devel jemalloc-devel libnfsidmap-devel libnsl2-devel userspace-rcu-devel patch libblkid-devel' returned a non-zero code: 1
make: *** [container-nfs-provisioner] Error 1
[root@node1 nfs-ganesha-server-and-external-provisioner-master]#
```

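Workaround: pull a prebuilt image instead of building it. The build fails because the build container cannot resolve mirrors.fedoraproject.org (Curl error (6)), so `dnf install` cannot download repository metadata. The `fatal: Not a git repository` lines only mean that the source tree has no Git metadata for the version label; they do not stop the build.

With the quota parameter enabled, nfs provisioner fails to start

Error message: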

```
Error creating xfs quotaer! xfs path /export was not mounted with pquota nor prjquota
```

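The system disk uses XFS with its default mount options, so quotas are not enabled: the mount shows `noquota` and /etc/fstab has plain `defaults`. As a result, the provisioner cannot start: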

```
[root@node131 /]# mount | grep centos
/dev/mapper/centos_master-root on / type xfs (rw,relatime,seclabel,attr2,inode64,noquota)
[root@node131 /]# cat /etc/fstab
#
# /etc/fstab
# Created by anaconda on Thu Dec 17 15:27:09 2020
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/centos_master-root / xfs defaults 0 0
UUID=d13f3d45-3ac2-4cda-b1ce-715d3153a900 /boot xfs defaults 0 0
```
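The provisioner refuses to start unless its export directory is mounted with `pquota` or `prjquota`. The same condition can be checked on the host before redeploying. The sketch below mirrors the error message above; it is hypothetical and not the provisioner's code.

```shell
# Hypothetical check: does a comma-separated mount-options string enable XFS
# project quota (pquota or prjquota)? Returns 0 if yes, 1 if no.
has_project_quota() {
  local opts=",$1,"   # pad so every option is delimited by commas
  case $opts in
    *,pquota,*|*,prjquota,*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `has_project_quota "$(findmnt -no OPTIONS /)" || echo "project quota is off"` prints the warning for the `noquota` mount above. The `usrquota,grpquota` options added in the fstab appendix would not satisfy the check either.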

PVC stuck in Pending

The NFS mount may have failed; check the NFS mount.

```
[root@node61 wangb]# kubectl get pvc
NAME         STATUS    VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS          AGE
test-claim   Pending                                      managed-nfs-storage   12m
---- ------ ---- ---- -------
Normal Provisioning 7m49s k8s-sigs.io/nfs-subdir-external-provisioner_nfs-client-provisioner-59b4c555d6-gl8pw_494f3aed-c583-4819-8af0-3fd2de70307f External provisioner is provisioning volume for claim "default/test-claim"
Normal ExternalProvisioning 108s (x26 over 7m49s) persistentvolume-controller waiting for a volume to be created, either by external provisioner "k8s-sigs.io/nfs-subdir-external-provisioner" or manually created by system administrator
```
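One way to narrow this down is to check, inside the provisioner pod, whether the NFS share from the deployment (`192.168.11.54:/mnt/inspurfs`) is actually mounted. The sketch below reads a mounts table in /proc/mounts format. `nfs_export_mounted` is a hypothetical helper.

```shell
# Hypothetical helper: succeed if SERVER:EXPORT is mounted over NFS according
# to a mounts table (default /proc/mounts; fields: source mountpoint fstype ...).
nfs_export_mounted() {
  local src=$1 table=${2:-/proc/mounts}
  awk -v src="$src" '
    $1 == src && $3 ~ /^nfs4?$/ { found = 1 }
    END { exit !found }' "$table"
}
```

For example, run `kubectl exec deploy/nfs-client-provisioner -- cat /proc/mounts > mounts.txt`, followed by `nfs_export_mounted 192.168.11.54:/mnt/inspurfs mounts.txt`. If the share is missing or unreachable, a reasonable next step is to check the export from a node with `showmount -e 192.168.11.54`.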